| Xiaoying Wei |

| xweias@connect.ust.hk |

|

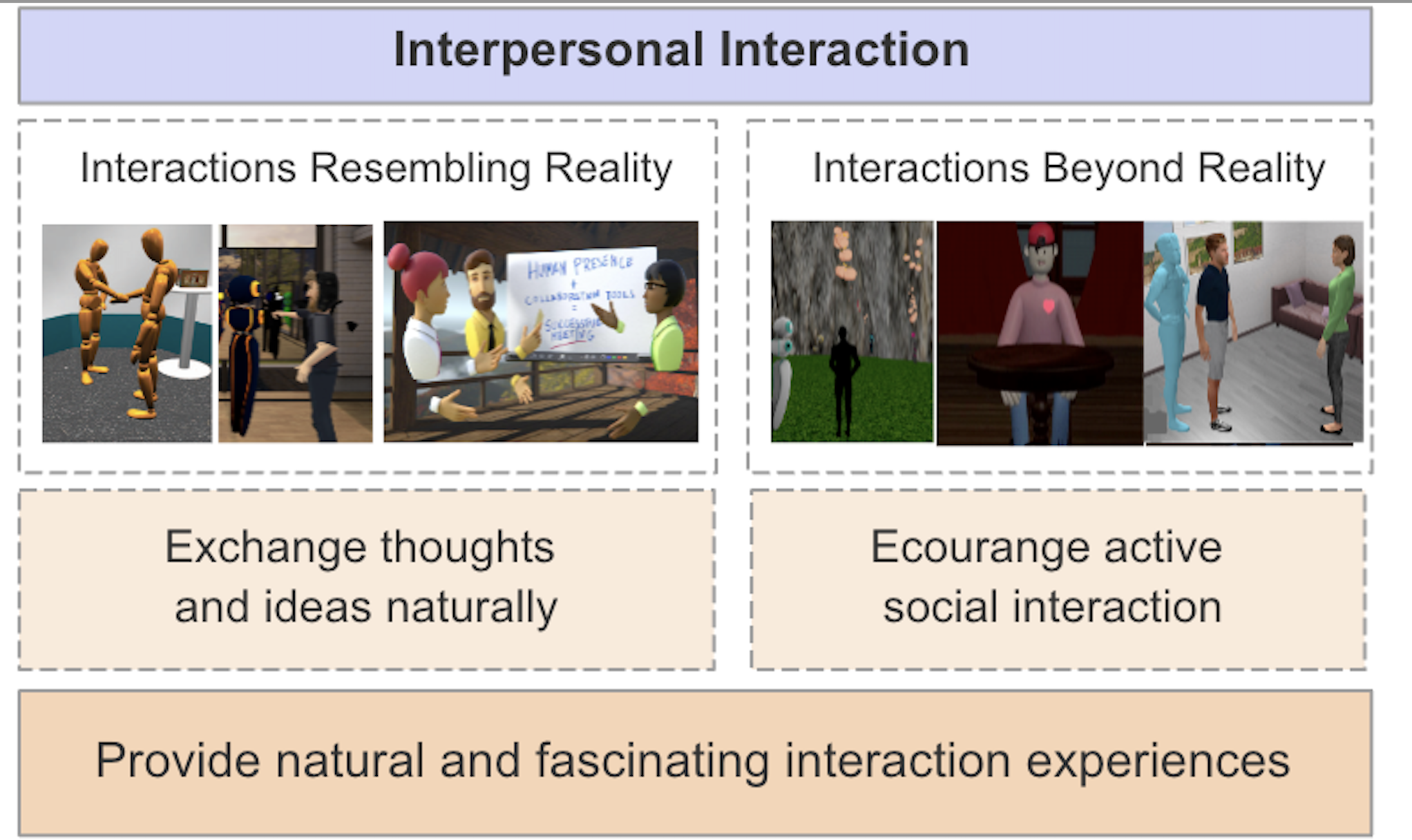

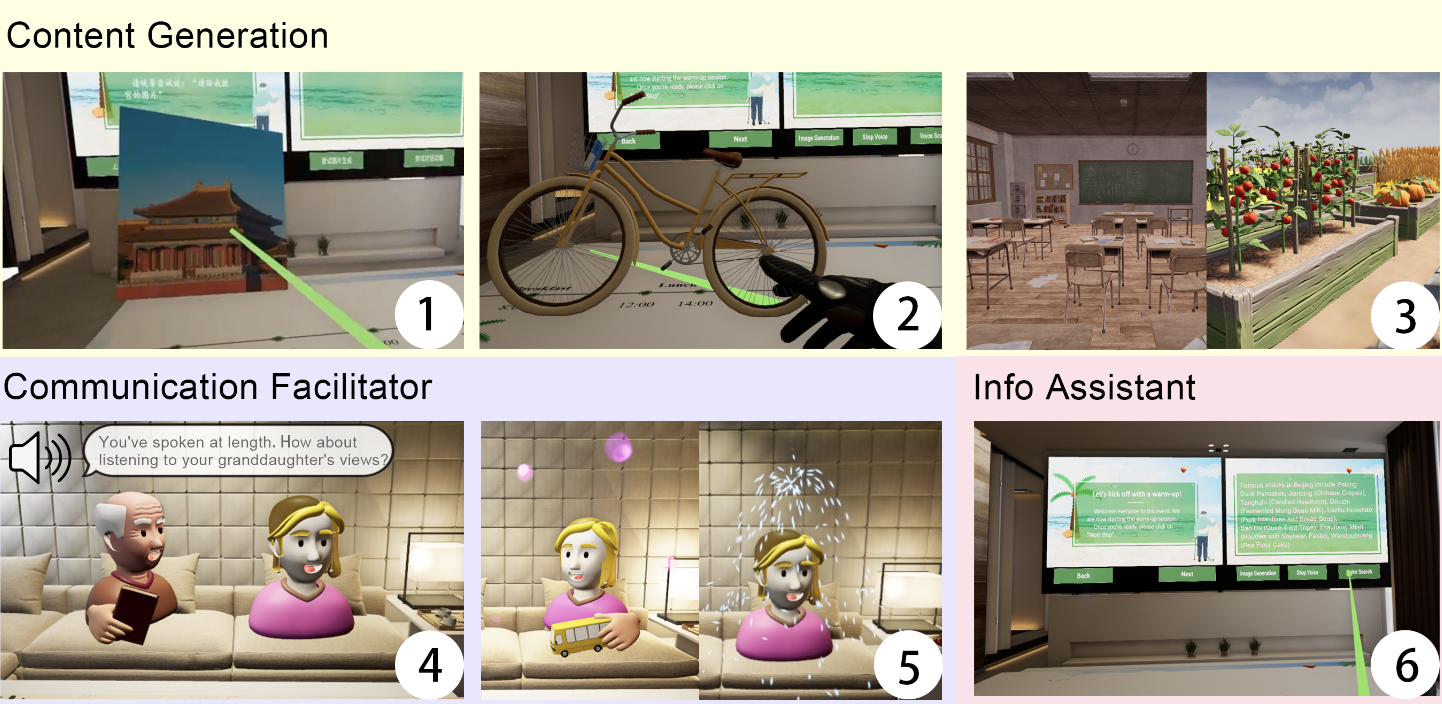

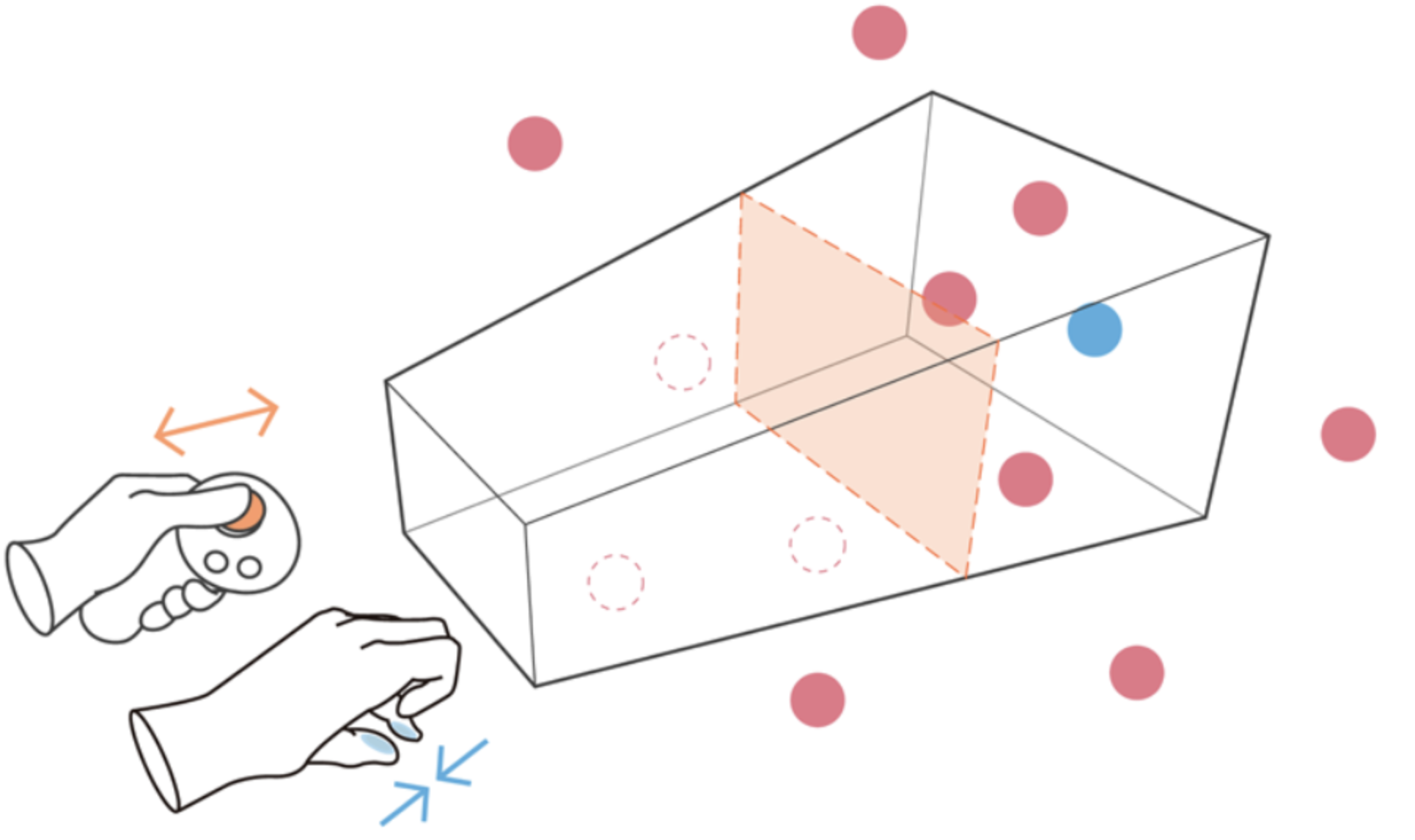

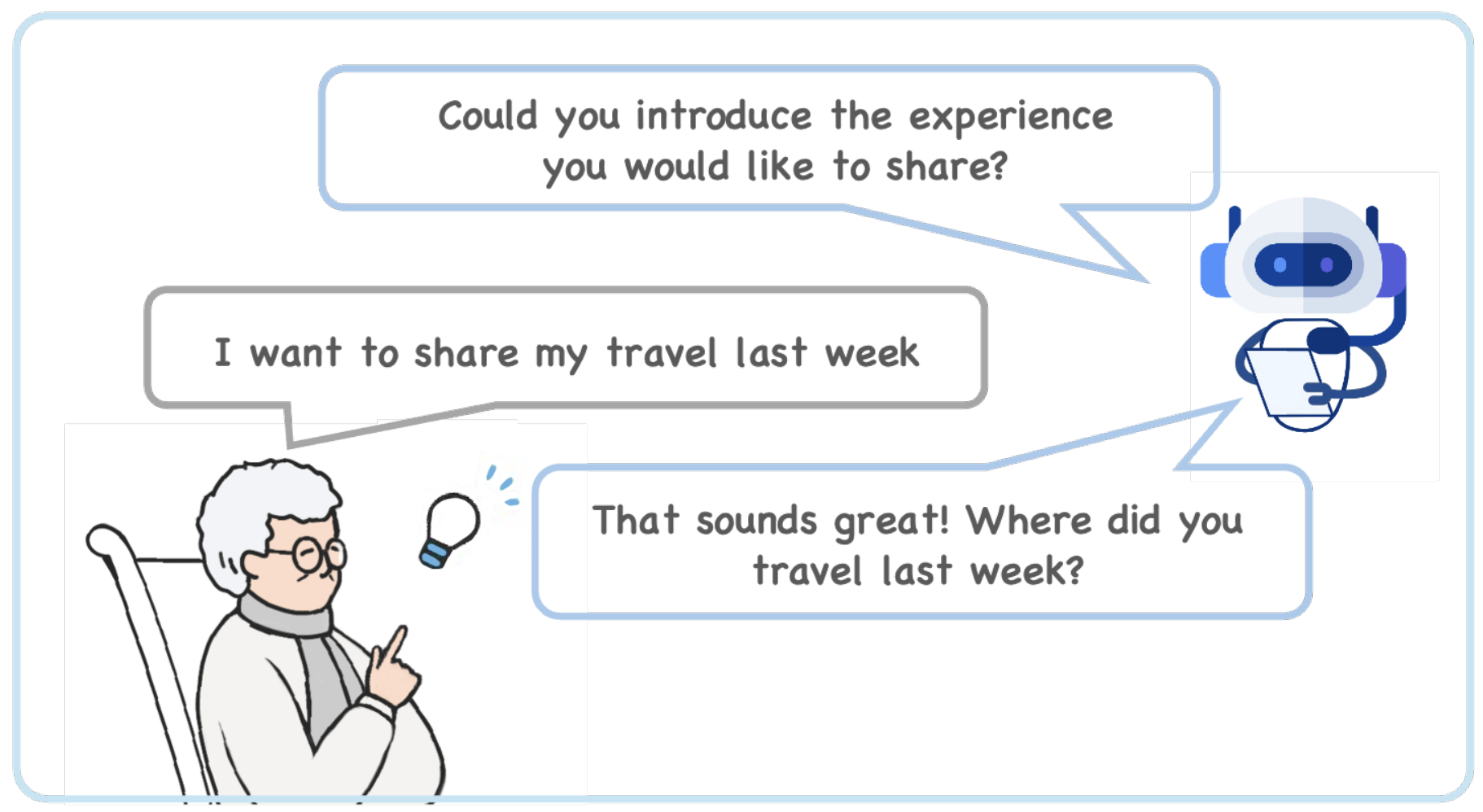

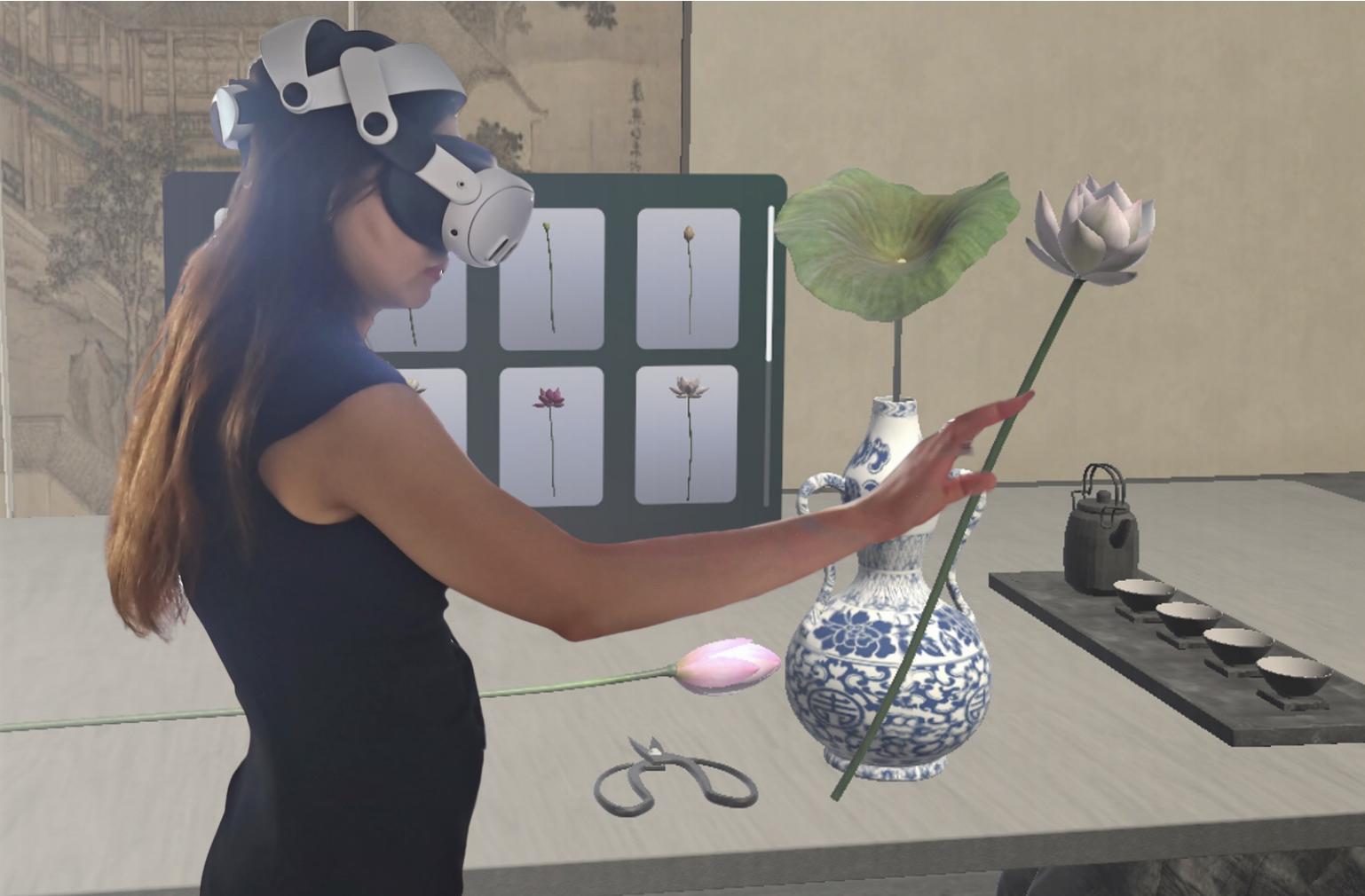

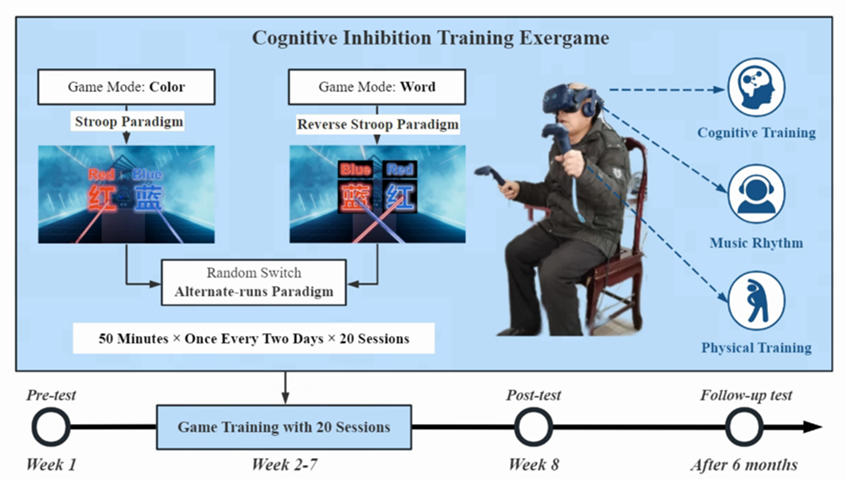

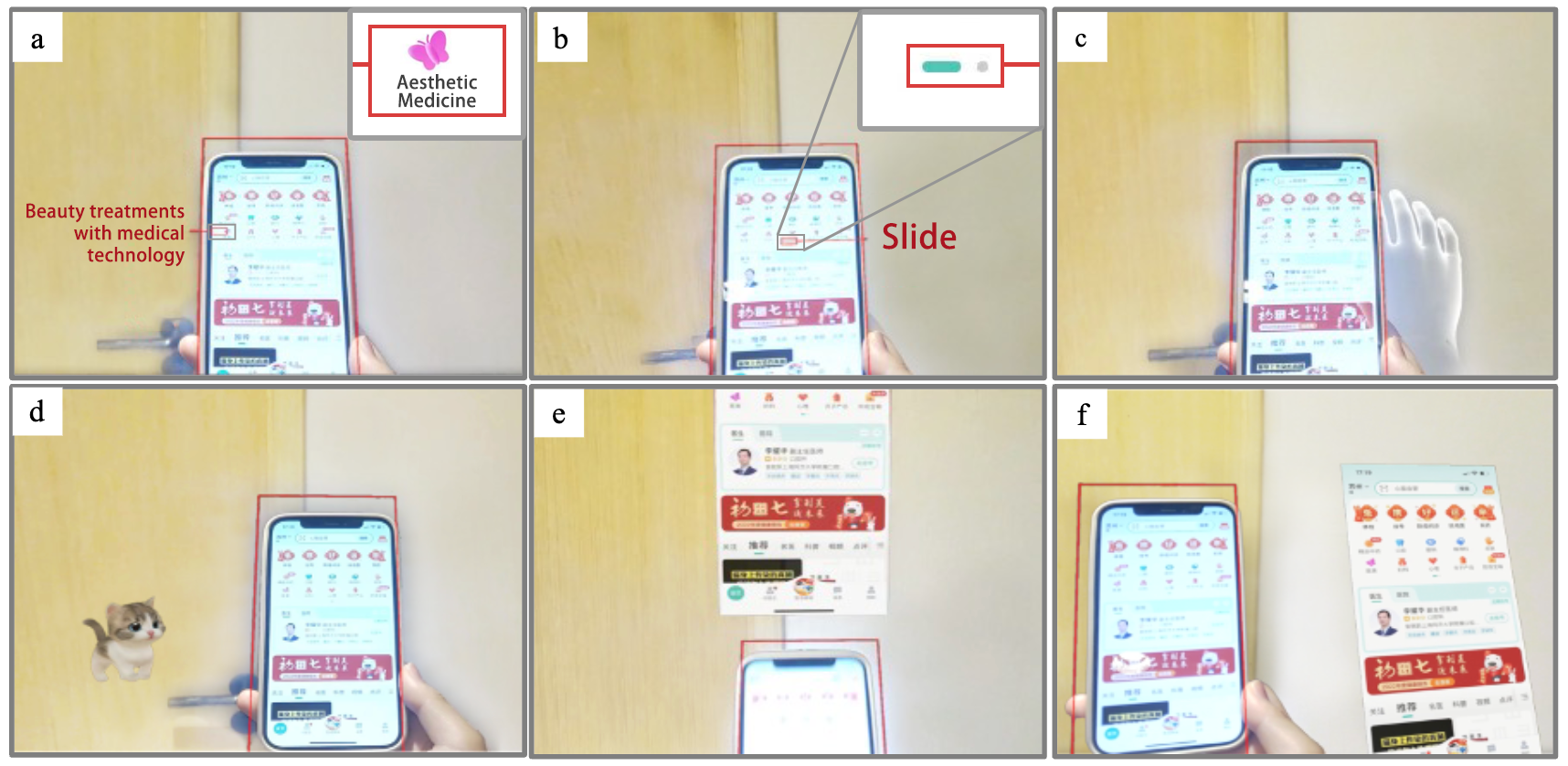

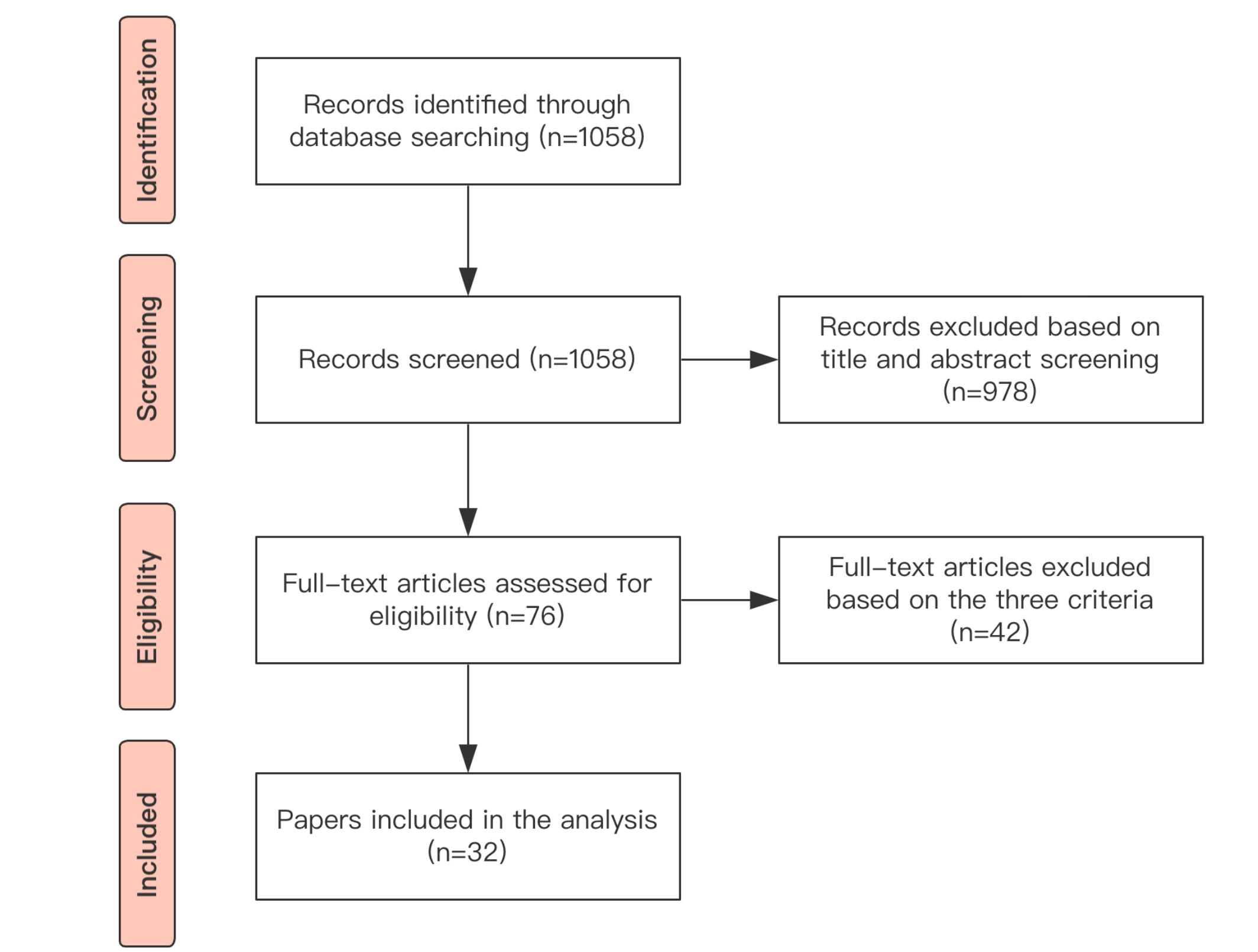

As a Human-Computer Interaction (HCI) researcher, I design, develop, and evaluate interactive technologies, such as VR, AR, and AI, to enhance social connectedness, promote healthy aging, and explore novel forms of interaction. By combining a user-centered design approach with qualitative research and prototype development, I aim to create more inclusive and socially impactful interaction technologies. I received my Ph.D. in Computational Media and Arts (CMA) from HKUST in 2025, supervised by Prof. Mingming Fan. I received my Master's degree from the Department of CST at Tsinghua University in 2020 and my bachelor's degree from Xiamen University in 2017. In recent years, I have published papers in top-tier HCI venues, including CHI, CSCW, TVCG, Ubicomp, UIST, and MM. I also serve as a reviewer for several prestigious conferences, contributing to scholarly exchange within the field. |

|